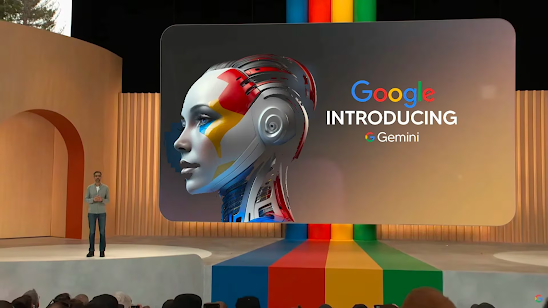

Fake Faces of AI: Google's Gemini Demo Sparks Controversy

Gemini is Google's latest and most powerful AI model, officially launched on December 6, 2023. It is a multimodal model, meaning a single model can process and generate text, translate languages, write different kinds of creative content, and interpret images. Here's a quick rundown of what we know about Gemini so far:

Capabilities:

- Multimodal: Gemini can handle not just text but also images and code. This makes it versatile and opens up a wide range of potential applications.

- Powerful: In benchmark tests, Gemini has outperformed other popular language models like GPT-3.5 in several tasks, showcasing its impressive capabilities.

- Conversational: Gemini excels at carrying on natural and engaging conversations, making it ideal for virtual assistants, chatbots, and other interactive AI applications.

- Creative: Gemini can generate different creative text formats, like poems, code, scripts, musical pieces, emails, letters, etc. It's a valuable tool for writers, artists, and anyone who wants to explore their creative side.

- Safe and responsible: Google has emphasized the importance of responsible AI development with Gemini. The model has undergone extensive safety evaluations to mitigate potential biases and ensure its outputs are not harmful.

Availability:

Currently, Gemini is available through Google Cloud's Vertex AI platform as a limited preview. This means access is restricted to select users and developers, but it's expected to become more widely available in the future.

Potential applications:

The potential applications of Gemini are vast and still being explored. Here are a few examples:

- Enhanced search engines: Gemini could power search engines that understand not just keywords but also the context and intent behind queries, leading to more relevant and accurate results.

- Personalized education: Gemini could create personalized learning experiences for students, adapting to their individual needs and pace.

- Improved customer service: Chatbots powered by Gemini could provide more natural and helpful customer service interactions.

- Scientific research: Gemini could be used to analyze large datasets of text and images, helping scientists make new discoveries and solve complex problems.

- Creative content creation: Gemini could assist writers, artists, and other creatives in generating new ideas and content.

Google has faced considerable controversy over the edited demo video for its newly launched AI model, Gemini. The video, released in early December 2023, showcased Gemini as having several seemingly advanced capabilities, including:

- Real-time understanding and responding to visual cues: In the video, a user shows Gemini a picture of a cat, and Gemini instantly generates a poem about it.

- Natural and engaging conversation: The video depicts Gemini carrying on a smooth and witty conversation with a user, answering questions and even cracking jokes.

- Multimodal processing: Gemini appears to seamlessly handle not just text but also images and code in the video.

However, it soon came to light that the video was not an accurate representation of Gemini's actual capabilities. Here's what we know now:

- The video was heavily edited: Google admitted to editing the video for "presentation clarity," which essentially means stitching together multiple successful interactions, omitting unsuccessful attempts, and likely editing the timeline for smoother flow. This created the misleading impression that Gemini could perform flawlessly and in real time, which is not the case.

- Gemini's real-time capabilities are still under development: While Gemini is indeed a powerful language model, its ability to understand and respond to visual cues in real time is still in its early stages. The video demonstrations were likely carefully curated scenarios that don't reflect the model's typical performance.

- Multimodal processing is still a challenge: Although Gemini can handle different data formats, integrating them seamlessly and generating outputs that effectively combine all the information is something AI models have not yet mastered.

This revelation has led to criticism from various corners, with some experts accusing Google of misleading the public about Gemini's capabilities. Others argue that while the video was undoubtedly misleading, it's important to remember that AI technology is still evolving, and showcasing advancements through polished demonstrations is not inherently wrong.